Books / Fundamentals of Computer Science / Chapter 10

Analysis of Algorithms

Analysis of algorithms is the study of how algorithms behave when they are executed. One of the most important characteristics of any algorithm is how long the algorithm will require to complete when run on an input of a particular size. In particular, we would like to know how the running time of an algorithm increases as the input size increases.

Implementing ArrayList

As a concrete example, let’s consider an implementation of the generic ArrayList class. An ArrayList uses an array of references as its internal storage. The methods of the ArrayList class use this array to store the elements added to the object. When a call to the add method is made while the array is full, a larger array must be allocated, and the original elements copied from the old storage array to the new storage array. Once this has been done, the old storage array is discarded, and the new storage array becomes the ArrayList’s storage.

public class ArrayList<E> {

private static final int INITIAL_CAPACITY = 4;

private E[] storage;

private int numElements;

public ArrayList() {

this.storage = (E[]) new Object[INITIAL_CAPACITY];

this.numElements = 0;

}

public int size() {

return numElements;

}

public void add(E value) {

if (numElements >= storage.length) {

grow();

}

storage[numElements] = value;

numElements++;

}

public E get(int index) {

if (index < 0 || index >= numElements) {

throw new IndexOutOfBoundsException();

}

return storage[index];

}

public void set(int index, E value) {

if (index < 0 || index >= numElements) {

throw new IndexOutOfBoundsException();

}

storage[index] = value;

}

private void grow() {

E[] larger = (E[]) new Object[numElements * 2];

for (int i = 0; i < storage.length; i++) {

larger[i] = storage[i];

}

storage = larger;

}

}The basic idea is that as calls to the add method are made, the added element values are stored in the first unused element of the storage array. When the current storage array becomes full, a new (larger) one is allocated by the private grow method.

The get and set methods simply retrieve elements from and store elements to elements of the storage array.

Some tests:

import junit.framework.TestCase;

public class ArrayListTest extends TestCase {

private ArrayList<Integer> empty;

private ArrayList<String> names;

protected void setUp() throws Exception {

empty = new ArrayList<Integer>();

names = new ArrayList<String>();

names.add("Alice");

names.add("Bob");

names.add("Carl");

names.add("Delores");

}

public void testSize() throws Exception {

assertEquals(0, empty.size());

assertEquals(4, names.size());

}

public void testAdd() throws Exception {

names.add("Eli");

assertEquals(5, names.size());

assertEquals("Eli", names.get(4));

// make sure the previous values are still there

assertEquals("Alice", names.get(0));

assertEquals("Bob", names.get(1));

assertEquals("Carl", names.get(2));

assertEquals("Delores", names.get(3));

}

public void testSet() throws Exception {

names.set(2, "Cornelius");

assertEquals("Cornelius", names.get(2));

}

}Note that because the initial capacity of the storage array is 4, when testAdd adds a fifth element, the storage array is re-allocated.

Implementing remove(int)

The remove method takes an integer index, and removes the element at that index from the ArrayList. Any elements to the right of the index must be moved over one position to the left. The removed element is returned.

Implementation:

public E remove(int index) {

E value = get(index);

for (int i = index + 1; i < size(); i++) {

set(i - 1, get(i));

}

numElements--;

return value;

}And a test:

public void testRemove() throws Exception {

String gone = names.remove(1);

assertEquals("Bob", gone);

assertEquals(3, names.size());

assertEquals("Alice", names.get(0));

assertEquals("Carl", names.get(1));

assertEquals("Delores", names.get(2));

}Analysis of the remove algorithm

We can think of the remove method as implementing an algorithm: the ArrayList remove algorithm.

When the program calls the remove method, how long will it take to complete? This depends on a wide variety of factors:

- how many elements are in the ArrayList

- which element is being removed

- how fast the computer is

- how good the Java Virtual Machine’s Just-In-Time compiler is

- whether or not the ArrayList and its storage array currently reside in the CPU cache

- etc., etc.

Because of the number of factors involved, this question cannot be answered precisely.

We can still usefully characterize the performance of the underlying algorithm by removing from consideration all of the incidental factors (how fast the CPU is, etc.) and focusing on the mathematical parameters of the algorithm. We have two such parameters:

- the number of elements in the ArrayList

- the index of the element being removed

So, based on these parameters, how can we characterize the running time of the algorithm? The key is that we will count the number of constant-time steps the algorithm will take. A constant-time step is simply some sequence of operations that is guaranteed to complete in a constant amount of time; any finite sequence of instructions is a constant-time step.

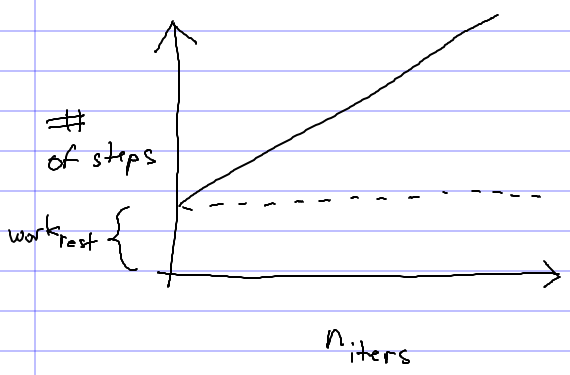

Looking at the code of the remove method, we notice that the only construct which might not complete in a constant amount of time is the loop. We also note that the amount of work outside the loop is constant, and the amount of work done inside one iteration of the loop is constant. So, we should expect the amount of time it takes the method to complete to be

(niters ⋅ workloop) + workrest

where niters is the number of loop iterations, workloop is the (constant) amount of work done per loop iteration, and workrest is the (constant) amount of work done outside the loop. We can thus think of the running time of the algorithm as being a function of niters, the number of iterations executed by the loop.

If we plot this function, it looks like this:

As niters becomes large, the significance of workrest diminishes. So, we say that the running time of the algorithm is linear with respect to niters. This is just a fancy way of saying that the running time (as niters becomes sufficiently large) is proportional to the number of times the loop executes.

So, what is niters? It is a function of two parameters:

niters = (n - 1) - i

where n is the number of elements in the ArrayList, and i is the index of the element being removed.

So, when we call remove on an ArrayList with n/i elements, it will take a constant amount of time to remove the last element, and time proportional to n - 1 to remove the first element. This makes sense: more work is required to shift over the elements to the right of the one we’re removing the closer the removed element is to the beginning of the array.

Worst case

Typically, what we want to know when analyzing the running time of an algorithm is the worst-case running time; what is the maximum number of steps that will be required for the algorithm to complete. For the ArrayList remove algorithm, the worst case is removing the first element, which (as mentioned above) requires time proportional to

n - 1

ignoring workrest.

Average case

Sometimes, we might be interested in knowing what the average-case running time is. To say something meaningful about the average case, we need to know what possible inputs the algorithm might receive, and a probability distribution specifying how likely each possible input is. For the case of the ArrayList remove algorithm, the possible inputs are the index values 0 .. n-1. Let’s say that all inputs are equally likely. That means the average case running time will be

((n-1) + (n-2) + … + 1 + 0) / n

ignoring workrest.

The sum of the series

(n-1) + (n-2) + … + 1 + 0

is

(n / 2) ⋅ n

[Why? Google “gauss sum integers”.]

So, the average case running time works out to

((n / 2) ⋅ n) / n

which simplifies to n / 2.

Analysis of Algorithms

Algorithm analysis refers to examining an algorithm and determining, as a function of the size of its input, how many steps the algorithm will take to complete.

General rules:

-

a program statement that is not a method call: 1 step

-

loop executing n iterations: n * m, where m is the cost of a single loop iteration (NOTE: m may be a function of which loop iteration is being executed)

-

method call: however many steps the method body will take given the arguments passed to the method

Usually, we are interested in the worst case: the absolute upper limit for how many steps the algorithm will take. Sometimes we may be interested in the average case, although what constitutes the average case can be difficult to define and complicated to analyze. The worst case is usually fairly easy to figure out, and algorithms with good worst case behavior give us confidence that our program will run efficiently no matter what input we give it.

A simple example: a linear search for an array element matching a specified value:

public static<E> int findElement(E[] array, E element) {

for (int i = 0; i < array.length; i++) {

if (array[i].equals(element)) {

return i;

}

}

return -1;

}In the worst case (the array doesn’t contain the element we’re looking for), the loop will execute once for each element of the array. Each loop iteration executes 1 statement (the if). So, the worst case running time of this algorithm is

N + 1

where N is the length of the array. (We tacked on an extra step for the return statement at the end.)

A more complicated case: finding out whether or not an array contains duplicate elements:

public static<E> boolean containsDuplicates(E[] array) {

for (int i = 0; i < array.length; i++) {

E element = array[i];

for (int j = i + 1; j < array.length; j++) {

E other = array[j];

if (element.equals(other)) {

return true;

}

}

}

return false;

}This algorithm is harder to analysis because the number of iterations of the inner loop is determined by which iteration of the outer loop is executing.

It is clear that the in the worst case, the outer loop will execute once for each element of the array. The inner loop executes once for each element of the array past element i. We’ll say that the inner loop executes two statements, and that one statement executes before the inner loop (element = array[i]). So, as a series, the number of steps performed by the nested loops together is something like:

= (1 + 2(N-1)) + (1 + 2(N-2)) + … + (1 + 2(1)) + (1 + 2(0)) = N + 2(N * (N/2)) = N + N2

(Recall that the sum of the series 1 + 2 + … + N-2 + N-1 is N*N/2.)

Tacking on an extra step for the final return statement and putting the terms in canonical order, we get a worst case cost of

N2 + N + 1

where N is the length of the array.

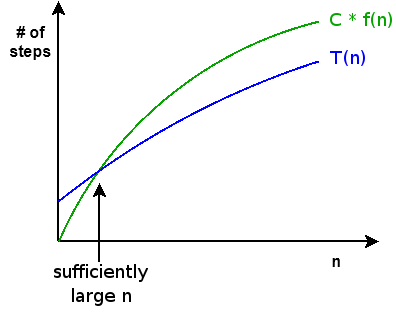

Big-O

In analyzing an algorithm, we are generally interested in its growth as N increases, rather than an exact number of steps. Big-O refers to characterizing the growth of the exact cost T(n) of an algorithm in relation to a simpler function f(n). Specifically, the exact cost T(n) of an algorithm is O(f(n)) iff

There exists some constant C such that C * f(n) >= T(n) for all sufficiently large values of n

Visually, f(n) is an upper bound for T(n) once we reach some sufficiently large value of n:

Finding the big-O bound for an algorithm is really easy, once you know its exact cost:

- Discard all terms except for the high order term

- Discard all constants

You can find the high order term according to the following inequalities (assume k > 1, j > k):

1 < log n < n1/k < n < nk < nj < kn

So, our algorithm to search an array for a specified element is O(N), and the algorithm to determine whether or not an array has duplicate elements is O(N2). In the second case, we had both N and N2 terms, but N2 dominates N.

Playing fast and loose

One of the nice things about analysis using big-O is that you can immediately drop low order terms. For example, it is perfectly valid to say things like:

That inner loop is O(N2), and it executes in an outer loop that is O(N). So, the entire algorithm is O(N3).

Big-O example problems

First problem

In the following method, let the problem size N be rowsCols, which is the number of rows and columns in the square two-dimensional array matrix.

public static boolean isUpperTriangular(double[][] matrix, int rowsCols) {

for (int j = 1; j < rowsCols; j++) {

for (int i = 0; i < j; i++) {

if (matrix[j][i] != 0.0) {

return false;

}

}

}

return true;

}As a function of the problem size N, what is the big-O upper bound on the worst-case running time of this method?

Also: would our answer be different if N was the number of elements in matrix?

Second problem

Assume that, in the following problem, a and b are both square two-dimensional arrays (same number of rows and columns). Let the problem size N be the number of rows and columns.

public static double[][] matrixMult(double[][] a, double[][] b) {

if (a[0].length != b.length) {

throw new IllegalArgumentException();

}

int resultRows = a.length;

int resultCols = b[0].length;

double[][] result = new double[resultRows][resultCols];

for (int j = 0; j < resultRows; j++) {

for (int i = 0; i < resultCols; i++) {

// compute dot product of row j in a and col i in b

double sum = 0;

for (int k = 0; k < b.length; k++) {

sum += a[j][k] * b[k][i];

}

result[j][i] = sum;

}

}

return result;

}As a function of the problem size N, what is the big-O upper bound on the worst-case running time of this method?

Again: would the answer be different if N was the number of elements in the two arrays?

Third problem

Assume that, in the following algorithm, the problem size N is the number of elements in the array.

// Return the index of the element of the array which is equal to

// searchVal. Returns -1 if the array does not contain

// any element equal to searchVal.

// Important: the array must be in sorted order for

// this algorithm to work.

public static<E extends Comparable<E>>

int binarySearch(E[] arr, E searchVal) {

int min = 0, max = arr.length;

while (min < max) {

int mid = min + (max-min)/2;

int cmp = arr[mid].compareTo(searchVal);

if (cmp == 0) {

return mid;

} else if (cmp < 0) {

max = mid;

} else {

min = mid + 1;

}

}

return -1;

}As a function of the problem size N, what is the big-O upper bound on the worst-case running time of this method?